These are some of the consequences of neglecting the QA architecture:

- The framework is not easily extensible

- A high maintenance workload

- Flaky tests

- Additional manual testing effort to isolate the root cause of failed tests

Framework Not Easily Extensible

What if a stakeholder decides that the automated framework must now take a screenshot of every step it runs?

There are a couple of ways to accomplish this: a hard one and an easy one. The hard way is to go to every single script in the framework and apply the logic before or after each action, depending on the need. Each of those places must call a new function that takes the screenshot; just think about how much time that code modification would take.

The easier way is to go to the BasePage class and, in every method that maps an action that can be performed on the elements on the screen, add a call to the new screenshot method. It is as simple as touching a couple of methods.
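As a rough illustration of the easy approach, here is a minimal Python sketch. Only the BasePage name comes from the text above; `FakeDriver`, `take_screenshot`, `type_text`, `LoginPage`, and the locator strings are hypothetical names invented so that the example runs without a real browser.

```python
class FakeDriver:
    """Hypothetical stand-in for a browser driver so the sketch runs on its own."""

    def __init__(self):
        self.screenshots = []  # file names of the screenshots "taken" so far
        self.actions = []      # actions performed, in order

    def save_screenshot(self, name):
        self.screenshots.append(name)

    def click(self, locator):
        self.actions.append(("click", locator))

    def type_into(self, locator, text):
        self.actions.append(("type", locator, text))


class BasePage:
    """Every page inherits its element actions from here."""

    def __init__(self, driver):
        self.driver = driver
        self._step = 0

    def take_screenshot(self, label):
        # The new requirement lives in exactly one method.
        self._step += 1
        self.driver.save_screenshot(f"{self._step:03d}_{label}.png")

    def click(self, locator):
        self.driver.click(locator)
        self.take_screenshot("click")

    def type_text(self, locator, text):
        self.driver.type_into(locator, text)
        self.take_screenshot("type")


class LoginPage(BasePage):
    USER = "id=user"      # assumed locators, for illustration only
    SUBMIT = "id=submit"

    def login(self, user):
        # The page script itself never mentions screenshots.
        self.type_text(self.USER, user)
        self.click(self.SUBMIT)


driver = FakeDriver()
LoginPage(driver).login("alice")
print(driver.screenshots)  # ['001_type.png', '002_click.png']
```

The page classes and test scripts stay untouched; only the two action methods in BasePage changed.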

But what if, a month after this new implementation, someone complains that there are too many screenshots, and that this has created an additional problem because it is now tedious to find the screenshot related to a particular execution? The new proposal is to keep the screenshots, but not all of them: only those where an error was detected.

Once again, with the first approach most of the calls to the screenshot method have to go, so every one of those calls must be analyzed one more time. With the second approach there are just a few calls to change. For that option, we implement a try/catch policy: if there’s an error in the flow, we capture the screenshot; otherwise the flow continues without capturing anything. This only has to be done in the methods that perform actions on the elements.
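The try/catch policy could look roughly like the following Python sketch. It assumes a hypothetical `FakeDriver` whose `click` raises a made-up `ElementNotFound` error when the locator is missing, and a helper named `_perform`; none of these names come from a real library.

```python
class ElementNotFound(Exception):
    """Hypothetical error raised by the stand-in driver."""


class FakeDriver:
    """Hypothetical driver: clicking an unknown locator fails."""

    def __init__(self, present):
        self.present = set(present)
        self.screenshots = []

    def click(self, locator):
        if locator not in self.present:
            raise ElementNotFound(locator)

    def save_screenshot(self, name):
        self.screenshots.append(name)


class BasePage:
    def __init__(self, driver):
        self.driver = driver

    def _perform(self, label, action, *args):
        # Run the action; capture a screenshot only if it fails,
        # then re-raise so the test still reports the error.
        try:
            return action(*args)
        except Exception:
            self.driver.save_screenshot(f"error_{label}.png")
            raise

    def click(self, locator):
        return self._perform("click", self.driver.click, locator)


driver = FakeDriver(present={"id=save"})
page = BasePage(driver)

page.click("id=save")  # succeeds, no screenshot
try:
    page.click("id=missing")  # fails, one screenshot is captured
except ElementNotFound:
    pass

print(driver.screenshots)  # ['error_click.png']
```

Re-raising the exception is a deliberate choice in this sketch: the screenshot is a side effect, and the failure itself should still reach the test runner.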

High Workload in Maintenance

Maintenance can be a real test of our own automation framework. Let’s suppose that locators weren’t declared as class attributes but were instead declared in every place where they are needed, for example in a function that fills in a product registration form.

Then, if the UI is refreshed and most of the locators change to a new set, it becomes mandatory to check every single place where a web element is declared and adjust it to the new locators.

Going back to the product registration form, it’s natural to use the same element several times across different flows. For example, one flow fills in only the basic product information, while another checks the behavior when the whole form is filled in. That implies several element declarations in several flows, with some of them repeated in more than one flow, which is bad in maintenance terms.

But what should have been done from the beginning? We should have declared all page locators in a centralized location, which avoids duplicates and reduces maintenance time.
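A minimal Python sketch of centralized locators for the product registration form might look like this. The class name `ProductFormPage`, the `RecordingDriver` stand-in, and the locator values are assumptions made for illustration.

```python
class RecordingDriver:
    """Hypothetical driver that just records what was typed where."""

    def __init__(self):
        self.typed = {}

    def type_into(self, locator, text):
        self.typed[locator] = text


class ProductFormPage:
    # Single place where the form's locators are declared (assumed values).
    # A UI refresh means editing these lines and nothing else.
    NAME = ("id", "product-name")
    PRICE = ("id", "product-price")
    DESCRIPTION = ("id", "product-description")

    def __init__(self, driver):
        self.driver = driver

    def fill_basic_info(self, name, price):
        self.driver.type_into(self.NAME, name)
        self.driver.type_into(self.PRICE, price)

    def fill_whole_form(self, name, price, description):
        # Reuses the basic flow instead of redeclaring its elements.
        self.fill_basic_info(name, price)
        self.driver.type_into(self.DESCRIPTION, description)


driver = RecordingDriver()
ProductFormPage(driver).fill_whole_form("Lamp", "19.90", "Desk lamp")
print(sorted(value for _, value in driver.typed))
```

Both flows share the same three declarations, so a renamed field id is a one-line change.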

Flaky Tests

Think about the new features that the QA automation framework will have to provide over time. Sooner rather than later, you realize that you need to refactor part of the framework because the architecture wasn’t validated with another specialized architect, and now test scripts that probably took months to develop will need to be changed.

To give a clearer picture, let’s suppose that the architecture doesn’t involve POM (Page Object Model) and you’re now experiencing a significant number of flaky tests. During analysis, we notice that waits aren’t used consistently before interacting with elements: some were added, some weren’t. The easy way to solve this is the correct use of POM: provide a wrapper for actions like click() or getText(), to give a couple of examples, that internally first checks whether the element is ready to be used, which is done with waits.

To solve this situation for good, you will probably need to introduce a POM with a BasePage class where the waits are used. All pages in the framework should then inherit from this BasePage, so that all of them have access to this functionality.

The long and really tedious way is to check all the actions in each script and make sure that a wait is included before every interaction. This is a long task, and it will certainly duplicate the same piece of code several times, which could cause the same problem again in the future if the wait approach ever needs to change.
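The wrapper idea can be sketched in Python as follows. This is a simplified, driver-free illustration: `FakeElement`, `_wait_for`, `ProductPage`, and the timeout values are hypothetical, and a real framework would normally rely on the explicit waits of its browser automation library instead of this hand-written polling loop.

```python
import time


class FakeElement:
    """Hypothetical element that becomes ready only after a few checks."""

    def __init__(self, text, ready_after_checks=0):
        self.text = text
        self.clicked = False
        self._checks_left = ready_after_checks

    def is_ready(self):
        if self._checks_left > 0:
            self._checks_left -= 1
            return False
        return True


class BasePage:
    def __init__(self, elements, timeout=2.0, poll=0.01):
        self.elements = elements  # maps locator -> FakeElement
        self.timeout = timeout
        self.poll = poll

    def _wait_for(self, locator):
        # Poll until the element exists and is ready, or give up at the deadline.
        deadline = time.monotonic() + self.timeout
        while True:
            element = self.elements.get(locator)
            if element is not None and element.is_ready():
                return element
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{locator} not ready after {self.timeout}s")
            time.sleep(self.poll)

    # Every action waits first, so page classes never have to remember to.
    def click(self, locator):
        self._wait_for(locator).clicked = True

    def get_text(self, locator):
        return self._wait_for(locator).text


class ProductPage(BasePage):
    SAVE = "id=save"      # assumed locators
    STATUS = "id=status"


elements = {
    ProductPage.SAVE: FakeElement("Save", ready_after_checks=3),
    ProductPage.STATUS: FakeElement("Saved"),
}
page = ProductPage(elements)
page.click(ProductPage.SAVE)
status = page.get_text(ProductPage.STATUS)
print(status)  # Saved
```

If the waiting strategy ever has to change, only `_wait_for` is touched, which is exactly what the script-by-script approach cannot offer.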

Additional Manual Testing Effort to Isolate Root Cause of Failed Tests

Sometimes it can be hard to isolate the root cause of a failure in our automated test cases. Could it be because of waits? Could it be because of a new defect? Could it be because of the data that we’re using in our tests?

Architectural decisions can have a big impact here. If our data is hard-coded in each test case, instead of being configured in external files that can be easily modified without touching the source code, a data change can make a whole set of tests unusable. Then the only way to test with changed data is to do it manually, which is time-consuming.
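As a small illustration of keeping data outside the test code, the Python sketch below loads product records from a JSON file. The file name `products.json`, the record fields, and the `check_product` function are assumptions; the file is written to a temporary directory here only so that the example is self-contained.

```python
import json
import tempfile
from pathlib import Path


def load_test_data(path):
    """Read test records from an external JSON file."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def check_product(product):
    # Stand-in for a real test: the logic never hard-codes any data.
    return bool(product["name"]) and product["price"] > 0


# In a real project this file would live next to the tests and be edited
# by hand; it is generated here only to keep the sketch runnable.
data_file = Path(tempfile.mkdtemp()) / "products.json"
data_file.write_text(
    json.dumps([
        {"name": "Lamp", "price": 19.9},
        {"name": "Chair", "price": 45.0},
    ]),
    encoding="utf-8",
)

products = load_test_data(data_file)
results = [check_product(product) for product in products]
print(results)  # [True, True]
```

Trying a different data set then means editing the JSON file, not the test source.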

Considerations

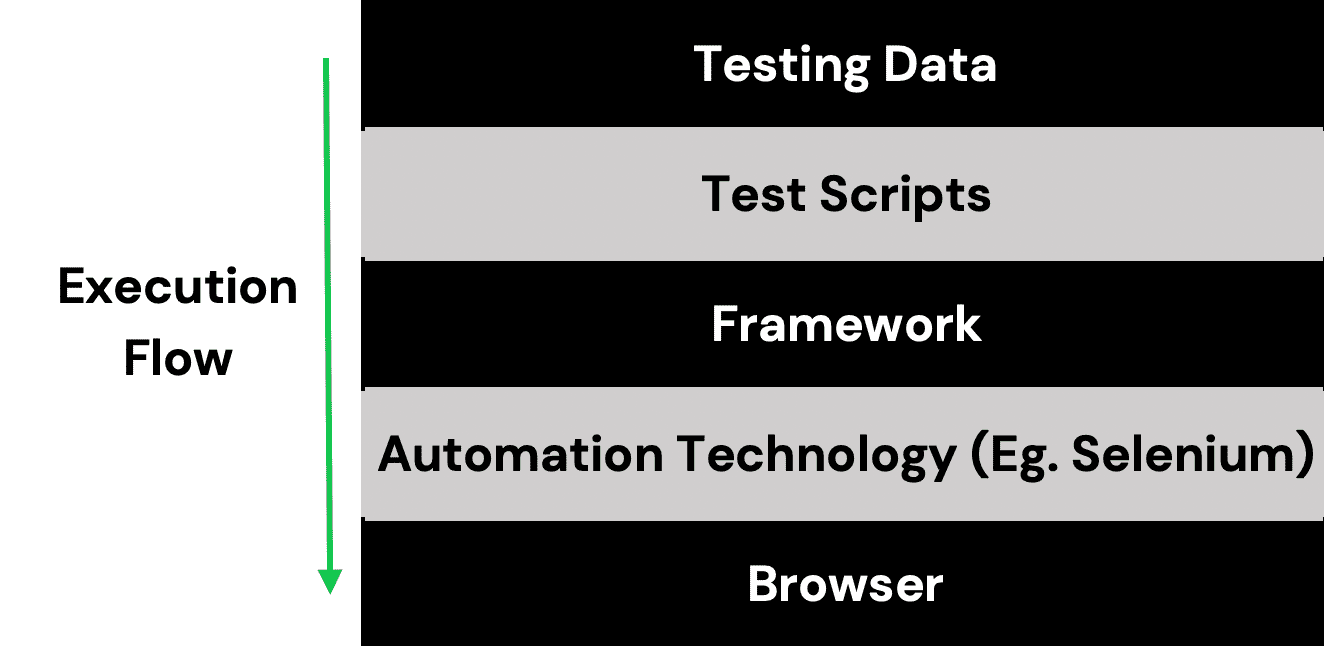

Having exposed above some of the most typical situations where refactors, the extra time, and all kinds of undesired effects due to bad architectural decisions, there is a good practice where a testing automation solution should follow a multi-layered architecture as follows:

Each layer interacts only with the next one. For example, test scripts interact only with the testing data and with the testing framework, that is, with the functionality provided by pages in a POM pattern approach.

Final Thoughts

Specify a solid QA architecture and you will be able to deliver fast and reliable QA results, while also driving down costs thanks to the reduction in manual test execution. On the other hand, if you don’t pay enough attention to the architectural details, you risk throwing away all the advantages of test automation.

Keep in mind that developing an automated QA testing framework should follow almost the same phases as a regular software development project. It will certainly have planning and design/validation phases, where all those important architectural conversations should be held with stakeholders.

If you’d like to learn more about our QA solutions, reach out to us today!